Penny Xu

Finding eigenvalues proof explained

2019-07-26

Does hearing eigenvalue or eigenvector trigger you? Have you ever solved for one, but didn't know what you were solving? Have you blindly used this equation:

$$\det(A - \lambda I) = 0$$

to solve for some eigenvalues, but never knew where the equation came from? Well, this post is clearly for you!

I was first introduced to these terms in my differential equations class. I remember memorizing the equations and passing my exams, thinking that it would be my last time seeing these eigen terms. However, I realized that they still come up everywhere, such as in transformations in Computer Graphics and analyzing data in Big Data... one literally cannot avoid them. So here is my take on explaining the equation above, in a probably unorthodox way.

On a side note, eigenvalue and eigenvector always sounded intimidating to me, and I think it's because eigenvalue/eigenvector combines German and English, so it always sounded like a proper noun with no meaning. But actually, eigen roughly means "own" or "characteristic" in German, which you can read as special or unique. So all this time, you have been solving these special little snow-, I mean special values and special vectors.

Definition:

$$A\vec{v} = \lambda\vec{v}$$

- $A$ is a matrix

- $\vec{v}$ is the eigenvector

- $\lambda$ is the eigenvalue

Reading this equation from left to right: when you apply a matrix $A$ to a vector $\vec{v}$, the resulting vector is equal to some scalar $\lambda$ times the same vector $\vec{v}$. In other words, after a transformation by matrix $A$ on a space, say in $\mathbb{R}^2$, out of all the vectors in the space that are transformed by matrix $A$, there are some special vectors called eigenvectors that stay on the same line through the origin. They do not get rotated off that line, but are rather made longer or shorter (or flipped, if the scalar is negative) by a scalar value called the eigenvalue.
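To make the definition concrete, here is a minimal sketch in plain Python. The matrix `A` and the vectors `v` and `u` are hypothetical values picked for illustration, and the helpers `apply` and `is_scaled_copy` are names made up for this sketch.

```python
# Hypothetical 2x2 example; the matrix and vectors are chosen for illustration only.
A = [[2, 1],
     [1, 2]]

def apply(matrix, vector):
    """Multiply a 2x2 matrix by a 2-component vector."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def is_scaled_copy(w, v):
    """True if w lies on the same line through the origin as v."""
    # The 2D cross product is zero exactly when the two vectors are parallel.
    return w[0] * v[1] - w[1] * v[0] == 0

v = [1, 1]  # an eigenvector of A
u = [1, 0]  # not an eigenvector of A

Av = apply(A, v)  # [3, 3], which is 3 * v, so the eigenvalue is 3
Au = apply(A, u)  # [2, 1], which points in a different direction than u

print(Av, is_scaled_copy(Av, v))
print(Au, is_scaled_copy(Au, u))
```

The vector `[1, 1]` only gets stretched by a factor of 3, while `[1, 0]` gets knocked off its original line, so only the first one qualifies as an eigenvector of this particular matrix.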

Proof

We all know that the equation $\det(A - \lambda I) = 0$ is used to solve for the eigenvalues, which are then used to solve for the eigenvectors. But where did this equation come from? Well, here is the proof I think makes sense intuitively, but keep in mind that it might not be a mathematically rigorous proof with all its technicalities...

$$A\vec{v} = \lambda\vec{v}$$

This is the definition that we are starting with. As explained above, the left-hand side produces a vector, and so does the right-hand side. Keep in mind that $\vec{v}$ is non-trivial, which means that it does not equal the zero vector, which we know is always a solution.

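$$A\vec{v} - \lambda\vec{v} = \vec{0}$$

Simply moving the right-hand side over by subtracting it from both sides. Keep in mind that the difference of two vectors is a vector, which is why the right-hand side is now the zero vector. It is basically a vector of zero length. So in $\mathbb{R}^2$, the zero vector is $\vec{0} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}$.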

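$$A\vec{v} - \lambda I\vec{v} = \vec{0}$$

I inserted the identity matrix $I$ in front of $\vec{v}$. Since $I\vec{v} = \vec{v}$, this doesn't really change anything, except now I can factor out $\vec{v}$. Keep in mind that $I$ has the same dimension as $A$.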

$$(A - \lambda I)\vec{v} = \vec{0}$$

Just factoring.

$$M\vec{v} = \vec{0}$$

Since $(A - \lambda I)$ is just a matrix, let's call it matrix $M$ for now. So this step is substituting $M$ for $(A - \lambda I)$. This will help us understand the next step better. Let's read the above equation from left to right. When we apply matrix $M$ to vector $\vec{v}$, the resulting vector is the zero vector $\vec{0}$. In other words, the matrix $M$, when applied as a transformation, collapses $\vec{v}$ down to the zero vector, or in technical terms, $\vec{v}$ is an element of the null space of $M$. The null space is all the vectors, say for example in $\mathbb{R}^2$, that are transformed into the zero vector after applying the transformation $M$ to them.
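Here is a small sketch of that null-space idea, again with a hypothetical matrix `A`, an eigenvalue `lam` that is assumed to be known already, and its eigenvector `v`. It builds $M = A - \lambda I$ and checks which vectors get sent to zero.

```python
# Hypothetical values for illustration; lam is assumed to be a known eigenvalue of A.
A = [[2, 1],
     [1, 2]]
lam = 3
v = [1, 1]  # eigenvector that goes with lam

# Build M = A - lam * I by subtracting lam on the diagonal only.
M = [[A[i][j] - (lam if i == j else 0) for j in range(2)] for i in range(2)]

def apply(matrix, vector):
    """Multiply a 2x2 matrix by a 2-component vector."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

Mv = apply(M, v)          # the eigenvector lands on the zero vector
Mu = apply(M, [1, 0])     # a vector outside the null space does not

print(M)
print(Mv)
print(Mu)
```

So `v` is in the null space of `M`, while `[1, 0]` is not: it lands on `[-1, 1]` instead of the zero vector.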

Since the null space of $M$ contains a non-zero vector, we can say that $M$ has linearly dependent columns. Keep in mind that the columns of $M$ are where the basis vectors land after the transformation. We know that the columns of $M$ are linearly dependent because we assume that $\vec{v}$ is non-trivial, so it cannot be the zero vector. We can see that after matrix $M$ is applied to some space, a dimension is lost, or in technical terms, the rank is reduced.
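The jump from a non-zero vector in the null space to linearly dependent columns can be spelled out in one line. This is a sketch for the 2D case, where $\vec{m}_1$ and $\vec{m}_2$ are names introduced here for the two columns of $M$, and $v_1$, $v_2$ are the components of $\vec{v}$:

$$
M\vec{v}
= \begin{bmatrix} \vec{m}_1 & \vec{m}_2 \end{bmatrix}
  \begin{bmatrix} v_1 \\ v_2 \end{bmatrix}
= v_1 \vec{m}_1 + v_2 \vec{m}_2
= \vec{0}
$$

Because $\vec{v}$ is not the zero vector, at least one of $v_1$ and $v_2$ is non-zero. That makes this a non-trivial combination of the columns that adds up to the zero vector, which is exactly what linearly dependent means.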

Ok so here is the crucial portion. First let's understand what taking the determinant of a matrix means. The determinant of a matrix $M$ is a scalar value that describes by what factor the "area" scales after transformation $M$ is applied. The "area" is the one defined by the basis vectors of the original vector space, if we are in $\mathbb{R}^2$. If we are in $\mathbb{R}^3$, then it would be the "volume" defined by the basis vectors. So the determinant of a matrix $M$ tells me how the region spanned by the basis vectors changes after $M$ is applied. For example, the determinant of a matrix is 2 if the "area" changes by a factor of 2.

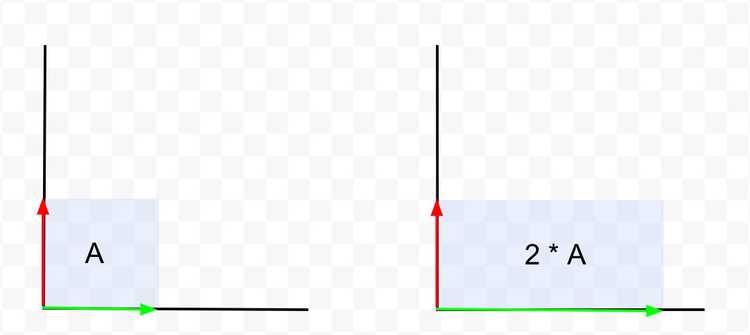

example:

The left graph is the original space defined by the green and the red basis vectors. The right graph is the space after applying the transformation. You can see that the area scales by a factor of 2 after applying the transformation, and you can see that the determinant of the transformation matrix is also 2. It is not a coincidence.
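The same check can be done numerically. The sketch below uses a hypothetical matrix `T` (not necessarily the one in the figure) whose determinant is 2, transforms the unit square, and measures the resulting area with the shoelace formula. The helper names are made up for this sketch.

```python
def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

def apply(matrix, vector):
    """Multiply a 2x2 matrix by a 2-component vector."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def polygon_area(points):
    """Shoelace formula for the area of a simple polygon."""
    total = 0
    n = len(points)
    for k in range(n):
        x1, y1 = points[k]
        x2, y2 = points[(k + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2

# Hypothetical transformation chosen for illustration.
T = [[2, 1],
     [0, 1]]

# The unit square is the region spanned by the two standard basis vectors.
unit_square = [[0, 0], [1, 0], [1, 1], [0, 1]]
transformed = [apply(T, p) for p in unit_square]

area_before = polygon_area(unit_square)
area_after = polygon_area(transformed)

print(det2(T), area_before, area_after)
```

The ratio `area_after / area_before` matches `det2(T)`, which is the scaling behavior described above.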

So when the determinant of a matrix is zero, it means that the area or the volume becomes zero, which means that when the transformation matrix is applied, the basis vectors of the space overlap, so that the "area" they trace out is zero. This also means that the transformation collapses the dimensions of the space, since the dimension is defined by the number of linearly independent basis vectors.

So if the determinant of a matrix $M$ is zero, then this tells me that the columns (the transformed basis vectors) of $M$ are linearly dependent, and vice versa, since the basis vectors overlap and the space is collapsed. It is also why we check whether a matrix is invertible by checking whether the determinant is zero. When the basis vectors overlap, there is no inverse transformation matrix that can bring us back to the original vector space.
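As a rough illustration of the link between a zero determinant, dependent columns, and invertibility, here is a sketch with two hypothetical matrices: `S`, whose second column is twice its first, and `T`, whose columns are independent. The function `inverse2` is a hand-rolled 2x2 inverse written for this sketch.

```python
def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

def inverse2(m):
    """Inverse of a 2x2 matrix; raises ValueError if the determinant is zero."""
    det = det2(m)
    if det == 0:
        raise ValueError("matrix is singular: determinant is zero")
    (a, b), (c, d) = m
    return [[d / det, -b / det],
            [-c / det, a / det]]

# Hypothetical matrices chosen for illustration.
S = [[1, 2],
     [2, 4]]   # second column is 2 times the first, so the columns overlap
T = [[2, 1],
     [0, 1]]   # columns point in different directions

print(det2(S))      # zero: the space is collapsed onto a line
print(det2(T))      # non-zero: no dimension is lost
print(inverse2(T))  # the inverse exists

try:
    inverse2(S)
except ValueError as err:
    print("no inverse for S:", err)
```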

$$\det(M) = 0$$

Since we know from the previous step that the columns of $M$ are linearly dependent, we can finally make the connection that $\det(M) = 0$.

$$\det(A - \lambda I) = 0$$

Simply substituting $A - \lambda I$ back in for $M$.
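To see the final equation in action, here is a minimal sketch for the 2x2 case only. For a matrix with rows `(a, b)` and `(c, d)`, expanding $\det(A - \lambda I) = 0$ gives the quadratic $\lambda^2 - (a + d)\lambda + (ad - bc) = 0$, which the quadratic formula can solve. The matrix `A` and the function names are hypothetical, and complex eigenvalues are deliberately not handled.

```python
import math

def eigenvalues_2x2(m):
    """Solve det(m - lam * I) = 0 for a 2x2 matrix with real eigenvalues."""
    (a, b), (c, d) = m
    trace = a + d
    det = a * d - b * c
    # Characteristic polynomial: lam^2 - trace * lam + det = 0
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("complex eigenvalues are not handled in this sketch")
    root = math.sqrt(disc)
    return ((trace - root) / 2, (trace + root) / 2)

def det_shifted(m, lam):
    """Compute det(m - lam * I) for a 2x2 matrix."""
    (a, b), (c, d) = m
    return (a - lam) * (d - lam) - b * c

# Hypothetical matrix chosen for illustration.
A = [[2, 1],
     [1, 2]]

eigs = eigenvalues_2x2(A)
print(eigs)

# Each eigenvalue makes the determinant vanish; other values do not.
for lam in eigs:
    print(lam, det_shifted(A, lam))
print(2, det_shifted(A, 2))
```

Plugging each eigenvalue back into $A - \lambda I$ and solving $(A - \lambda I)\vec{v} = \vec{0}$ would then give the matching eigenvectors.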